Email marketing trends change every year, and you need to adapt.

If you’re still sending out the same boring newsletter or promotional offer you used five years ago, it’s time to make some improvements and adjustments.

But where do you start?

You may want to try testing a couple of different templates or designs to see which one is the most effective.

A/B testing isn’t only for people who want to update their old email strategies.

It’s also great for business owners and marketers who are actively trying to keep up with new trends.

Making minor changes to your subject lines, color scheme, CTA buttons, and design could drastically improve your conversions.

If you’ve never attempted to A/B test your marketing emails, I’ll show you how to get started.

Test only one hypothesis at a time

First, decide what you want to test.

Once you decide what you’re testing, come up with a hypothesis.

Next, design the test to check that hypothesis.

For example, you may want to start by testing your call to action.

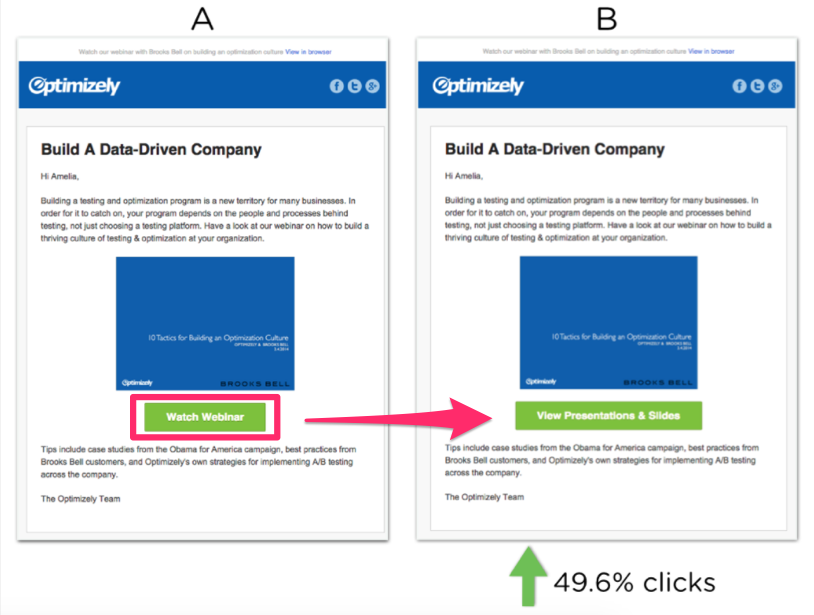

Let’s look at how Optimizely tested their CTA button.

These two messages are identical.

The only thing that changed was the wording of their call to action.

They didn’t change the color, design, heading, or text of the message.

Optimizely simply tested “Watch Webinar” against “View Presentations & Slides.”

The results were drastically different.

Subscribers clicked on the variation nearly 50% more than the control group.
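If you want to check a claim like “nearly 50% more clicks” against your own numbers, the math is simple. Here’s a minimal Python sketch of a relative-lift calculation. The click-through rates below are made-up placeholders, not Optimizely’s actual figures, and `relative_lift` is just a name chosen for this illustration.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Return how much higher (or lower) the variant rate is, relative to the control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical click-through rates for illustration only.
control_ctr = 0.040  # share of recipients who clicked the control CTA
variant_ctr = 0.059  # share of recipients who clicked the variation

print(f"Relative lift: {relative_lift(control_ctr, variant_ctr):.1%}")
```

Keep in mind this is a relative difference. Going from a 4% click rate to a 6% click rate is a 50% lift, even though the gap is only two percentage points.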

You may want to run further tests on other components of the message.

So, now that Optimizely knows which variation produces the most clicks, they can proceed with testing different subject lines that can increase open rates.

Where do you start?

Before you can come up with a valid hypothesis, you may need to do some research.

Decide which component of your subject line you want to test.

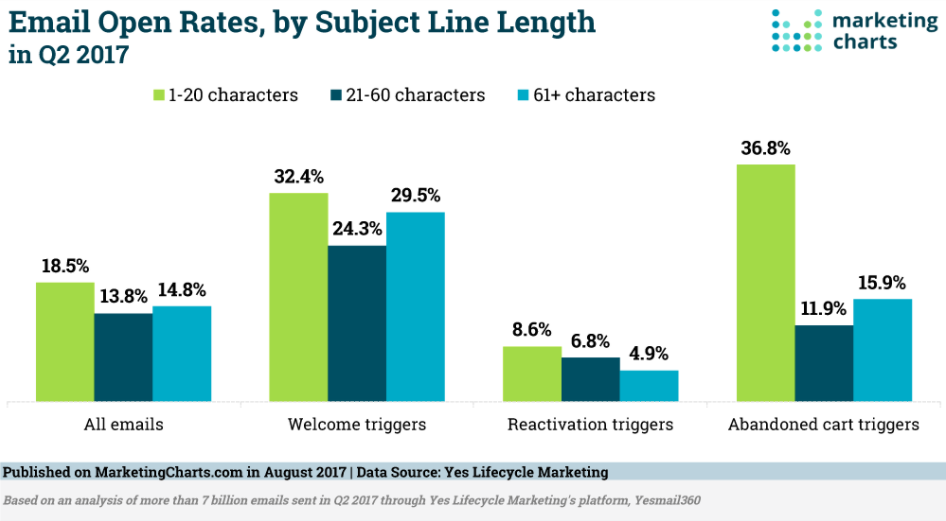

Here’s some great data from Marketing Charts.

Based on this information, you could A/B test the number of characters in your subject line.

The data shows that subject lines with 1-20 characters produce the most opens.

Take that one step further.

Your hypothesis could be that 11-20 characters will produce more opens than 1-10 characters.

There’s your variation.
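Before you send anything, it helps to confirm that each subject line actually lands in the bucket your hypothesis is about. Here’s a small Python sketch that does that. The bucket boundaries come from the hypothesis above, while the function name and the sample subject lines are hypothetical.

```python
def length_bucket(subject: str) -> str:
    """Classify a subject line by character count, ignoring outer whitespace."""
    n = len(subject.strip())
    if n == 0:
        raise ValueError("subject line is empty")
    if n <= 10:
        return "1-10"
    if n <= 20:
        return "11-20"
    return "21+"


# Hypothetical subject lines for the two test groups.
control_subject = "Sale today"
variation_subject = "Your weekly deals"

for subject in (control_subject, variation_subject):
    print(f"{subject!r}: {len(subject)} characters, bucket {length_bucket(subject)}")
```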

Let’s say the first thing you tested was a CTA button, like in the Optimizely example.

Now, you can move on to the subject line.

If you tested the CTA and subject line at the same time, you wouldn’t know which change was responsible for your results.

You can’t effectively test a hypothesis with multiple variables.

Testing one thing at a time will ultimately help you create the most efficient message.
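One way to enforce the one-variable rule is to describe each email as a set of fields and confirm that exactly one field differs. The sketch below assumes you keep your drafts in a simple dictionary. The field names, the subject line, and the button color are hypothetical, and only the two CTA wordings come from the Optimizely example.

```python
from typing import Dict, List


def changed_fields(control: Dict[str, str], variation: Dict[str, str]) -> List[str]:
    """List every field whose value differs between the two emails."""
    keys = sorted(set(control) | set(variation))
    return [key for key in keys if control.get(key) != variation.get(key)]


def is_single_variable_test(control: Dict[str, str], variation: Dict[str, str]) -> bool:
    """A clean A/B test changes exactly one field."""
    return len(changed_fields(control, variation)) == 1


control = {
    "subject": "Join our next webinar",  # hypothetical
    "cta": "Watch Webinar",
    "button_color": "blue",  # hypothetical
}
variation = dict(control, cta="View Presentations & Slides")

print(changed_fields(control, variation))
print(is_single_variable_test(control, variation))
```

If the check returns more than one field, split the changes into separate tests and run them one after another.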

How to set up your A/B email tests

All right, now that you know what to test, it’s time to create your email.

How do you do this?

It depends on your email marketing service.

Not all platforms give you this option.

If your current provider doesn’t have this feature, you may want to consider finding an alternative service.

I’ll show you the step-by-step process of running an A/B test through HubSpot’s platform.

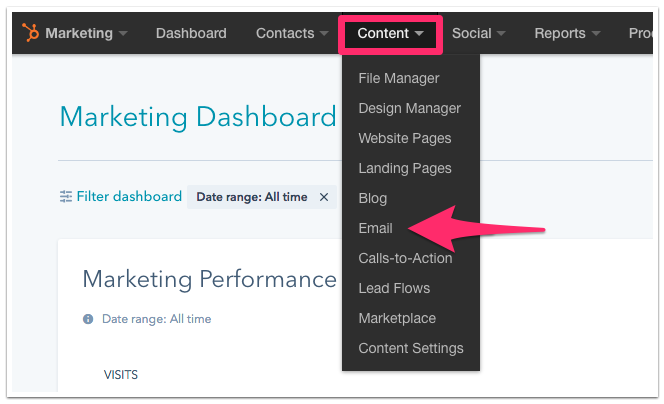

Step #1: Select “Email” from the “Content” tab of your Marketing Dashboard

Your marketing dashboard is pretty much the home page for the HubSpot account.

Just navigate to the content tab and select Email to proceed.

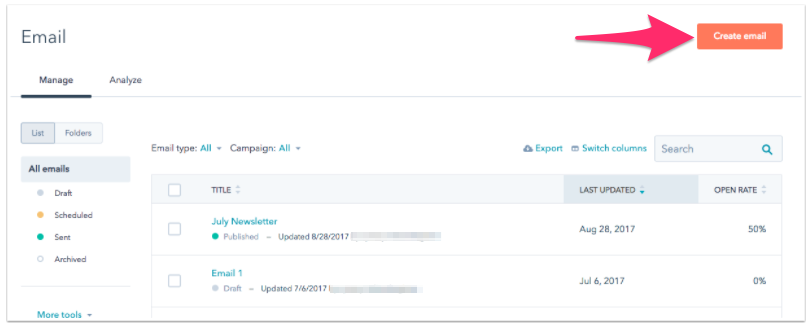

Step #2: Click “Create email”

Look for the “Create email” button in the top right corner of your page.

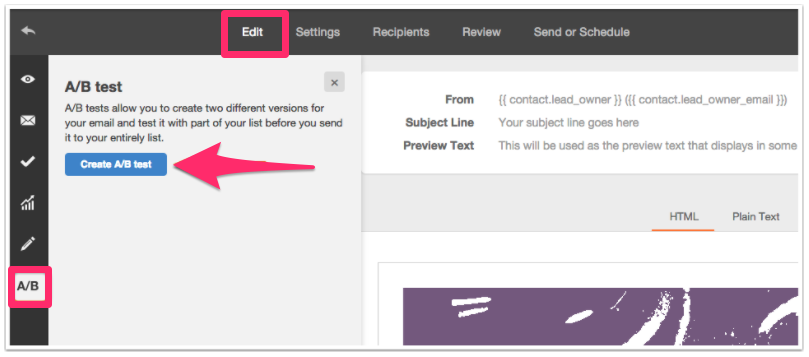

Step #3: Create your A/B test

Once you name your email campaign and select a template, you’ll see the editing tab.

Click on the blue “Create A/B Test” button on the left side of your screen.

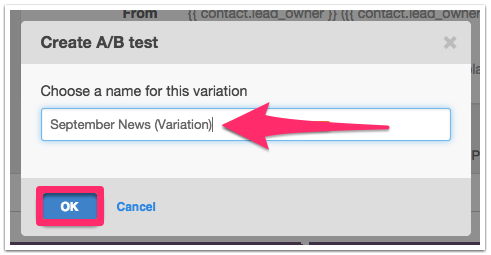

Step #4: Name the variation

By default, this popup will have the name of your campaign with “(Variation)” after it.

But you can name it something more specific based on what you’re testing.

For example, you can name it “September News CTA Button Placement” instead.

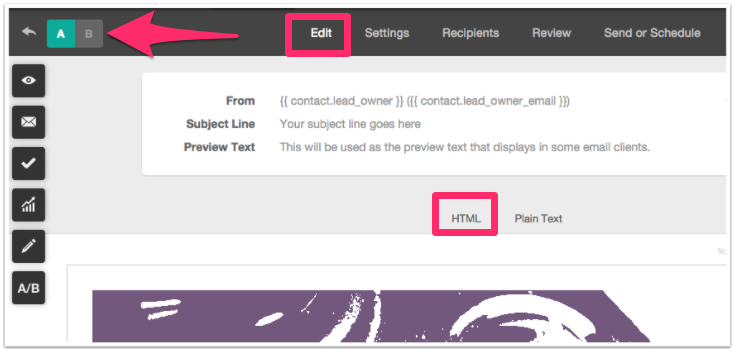

Step #5: Change the variation based on your hypothesis

Now you can edit the two messages.

Remember, the content should be identical.

Change only the one thing you’re testing.

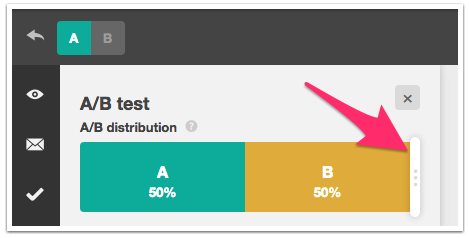

Step #6: Choose the distribution size of the test groups

A 50/50 split is usually the best distribution because equal-sized groups are the easiest to compare.

But if you want to modify it, drag the slider to change the distribution ratio.

Step #7: Analyze the results

After you send out the test, HubSpot’s software automatically generates a report.

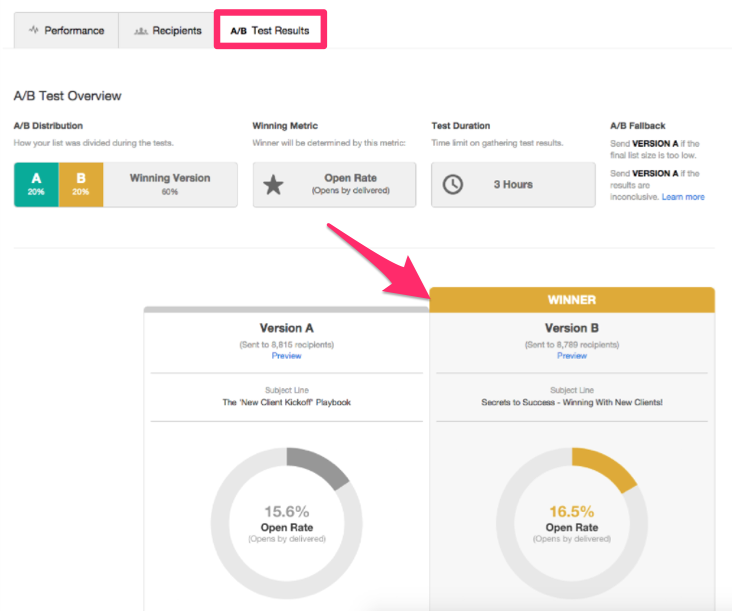

Based on the test we ran, Version B had a higher open rate.

So, that must be the clear winner, right?

Not so fast.

It was higher by less than 1% compared to the control group.

The difference isn’t significant enough to declare a definitive winner.

It’s an inconclusive test.

That’s OK.

These things happen.

If the results are within 1% like in the example above, it’s pretty clear they are inconclusive.

But what about 5%? 10%? Or 15%?

Where do you draw the line?

You need to determine your natural variance.

Run an A/A test email to determine this.

Here’s an example of an A/A test on a website:

The pages are the same.

But the one on the right saw 15% higher conversions.

So that’s the natural variance.

Use this same concept for your email campaign.

Send identical emails to see what the open rates and click-through rates are.

Compare that number against your A/B test results to see whether the difference between your two versions was meaningful.
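Here’s a rough Python sketch of that comparison. It treats the gap between two identical emails as your natural variance and flags an A/B result only when its gap is bigger. All the rates are hypothetical, and the function names are made up for this example. A single A/A send gives you only a rough baseline, so treat the answer as a sanity check instead of proof.

```python
def relative_difference(rate_one: float, rate_two: float) -> float:
    """Relative gap between two rates, measured against the lower one."""
    low, high = sorted((rate_one, rate_two))
    if low <= 0:
        raise ValueError("rates must be positive")
    return (high - low) / low


def exceeds_natural_variance(
    ab_control: float, ab_variation: float, aa_first: float, aa_second: float
) -> bool:
    """True when the A/B gap is larger than the gap between two identical sends."""
    natural_variance = relative_difference(aa_first, aa_second)
    return relative_difference(ab_control, ab_variation) > natural_variance


# Hypothetical open rates.
aa_first, aa_second = 0.20, 0.23  # identical emails, 15% relative gap
print(exceeds_natural_variance(0.20, 0.202, aa_first, aa_second))  # 1% gap
print(exceeds_natural_variance(0.20, 0.26, aa_first, aa_second))   # 30% gap
```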

Test the send time of each message

Sometimes you need to think outside the box when you’re running these tests.

Your subject line and CTA button may not be the problem.

What time of day are you sending your messages?

What day of the week do your emails go out?

You may think Monday morning is a great time because people are starting the week ready to go through emails.

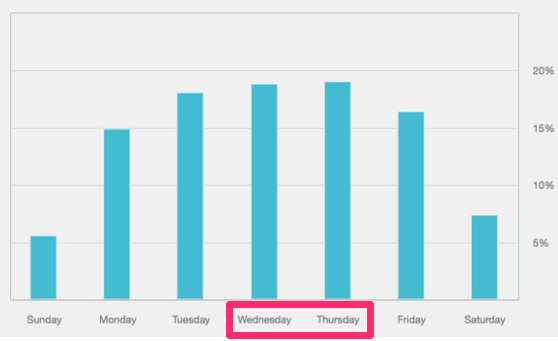

But further research suggests otherwise.

It appears more people open emails in the middle of the week.

You can run a split test between Wednesday and Thursday or Tuesday and Thursday to see which days are the best.

Take your test one step further.

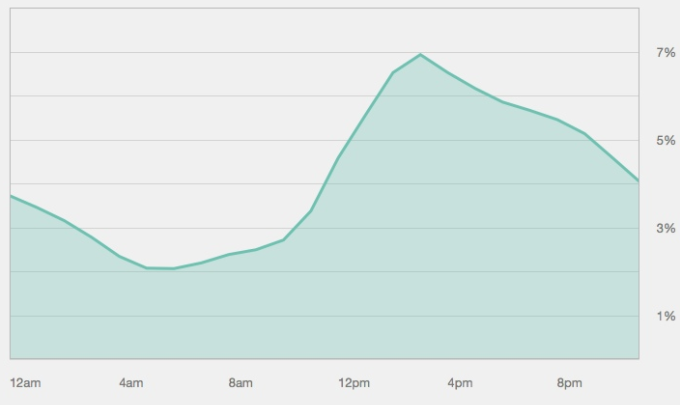

Hypothesize what time your subscribers are most likely to open your message and click through.

Studies show people are more likely to open an email in the afternoon.

Between 2:00 PM and 5:00 PM is the time when you’ll probably see the most activity.

Take this information into consideration when you’re running an A/B test.

Your opening lines are essential

Earlier we identified the importance of testing your subject line.

Let’s take that a step further.

Focus on the first few lines of your message.

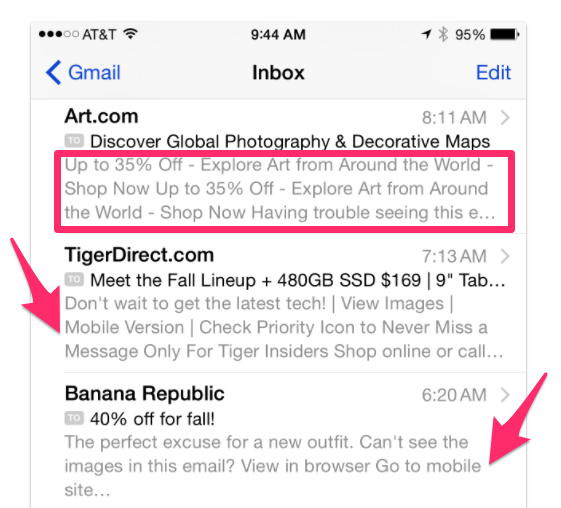

Most email platforms give the recipient a preview of the message underneath the subject.

Here’s what it looks like on a user’s phone in their Gmail account:

Play around with the opening lines of your message.

It’s a great opportunity to run an A/B test.

Look at some of the examples above.

Banana Republic doesn’t mention the offer in the first few lines.

Why?

Because it’s written in the subject line.

It would be redundant if they included that information again in the first sentence.

But if you keep reading, there’s probably room for improvement.

The next part of the message tells you that you can see all the images on their mobile site.

That may not be the most efficient use of their preview space.

There’s one way we can find out for sure.

Run an A/B test.

Changing your opening lines can help improve open rates by up to 45%.

Manually running an A/B test

As I mentioned earlier, not every email marketing platform has an A/B test option built into its service.

Other platforms besides HubSpot also offer an A/B test feature.

But if you’re happy with your current provider and don’t want to switch for just one additional feature, you can still manually run an A/B test.

Split your list into two groups, and run the test that way.
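If your platform lets you export and import contacts, you can do the split yourself. Below is a minimal Python sketch that shuffles a list and cuts it in two, so neither group is biased by signup date or alphabetical order. The `split_list` function, its parameters, and the example addresses are all hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def split_list(
    subscribers: Sequence[str], share_a: float = 0.5, seed: Optional[int] = None
) -> Tuple[List[str], List[str]]:
    """Randomly split subscribers into group A and group B."""
    if not 0 < share_a < 1:
        raise ValueError("share_a must be between 0 and 1")
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)  # shuffle a copy, leave the input untouched
    cut = round(len(shuffled) * share_a)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical subscriber list.
subscribers = [f"user{i}@example.com" for i in range(100)]
group_a, group_b = split_list(subscribers, share_a=0.5, seed=42)
print(len(group_a), len(group_b))
```

Passing a `seed` makes the split repeatable, which is handy if you need to recreate the same two groups later.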

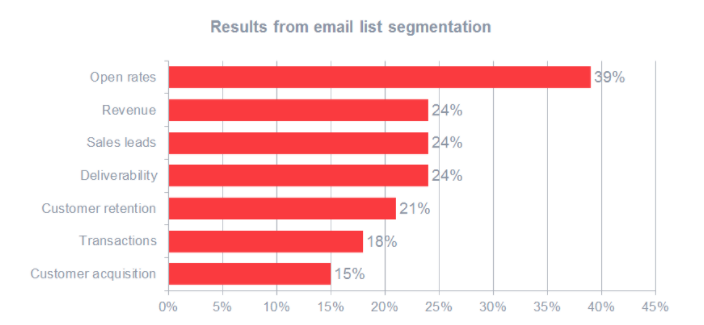

It’s possible you already have your contacts segmented by other metrics.

This can help increase open rates and conversions.

But it’s also an effective method for analyzing your hypothesis.

You’ll have to create two separate campaigns and compare the results, which is completely fine.

You just won’t see the comparison side by side on the same page as we saw in the earlier example.

If you’re doing this manually, always run your tests simultaneously.

Sending the two versions at different times could skew the results, because send time is a major factor in how an email performs.

Test a large sample size.

This will help ensure your results are more accurate before you jump to definitive conclusions.
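To see why sample size matters, here’s a sketch using a standard two-proportion z-test. This is a general statistics technique, not something HubSpot or the examples in this post rely on, and the open counts are invented. The same 20% vs. 22% open rates are tested on a small list and a large one.

```python
import math


def two_proportion_p_value(opens_a: int, sent_a: int, opens_b: int, sent_b: int) -> float:
    """Two-sided p-value for the difference between two open rates (pooled z-test)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 1.0  # no variation at all, so nothing to distinguish
    z = (rate_b - rate_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal


# Hypothetical results: 20% vs. 22% open rate at two list sizes.
p_small = two_proportion_p_value(100, 500, 110, 500)
p_large = two_proportion_p_value(4000, 20000, 4400, 20000)
print(f"500 per group:    p = {p_small:.3f}")
print(f"20,000 per group: p = {p_large:.6f}")
```

A common convention is to treat a p-value below 0.05 as significant. With 500 recipients per group the gap could easily be noise, while the same gap across 20,000 recipients per group is very unlikely to be a fluke.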

Running a manual test does not mean you should test more than one variable at the same time.

Stick to what we outlined earlier, picking a single variable for each test.

Experiment with the design of your email campaigns

Once you have your subject line, opening sentences, and calls to action mastered, it’s time to think about your existing template.

You can keep all your content the same, but change the layout.

Here are some examples of different templates from MailChimp:

What do all of these templates have in common?

The word count.

None of these templates give you space to write long paragraphs because long copy isn’t effective.

Keep your message short.

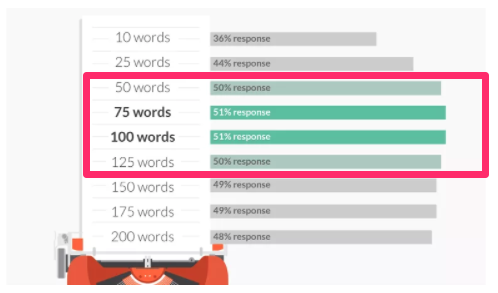

Research from Boomerang suggests that your email should be between 50 and 125 words.

The messages in their test sample got at least a 50% response rate.
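You can check a draft against that range before you send it. Here’s a small Python sketch that counts words by splitting on whitespace. The 50 and 125 limits come from the Boomerang figures above, while the function names and the sample draft are hypothetical.

```python
def word_count(body: str) -> int:
    """Count whitespace-separated words in the email body."""
    return len(body.split())


def within_recommended_length(body: str, low: int = 50, high: int = 125) -> bool:
    """True when the body falls inside the suggested word range (inclusive)."""
    return low <= word_count(body) <= high


# Hypothetical draft for illustration.
draft = "Our fall sale starts today. " * 12
print(word_count(draft), within_recommended_length(draft))
```

This is a simple count of plain text. If your email body is HTML, strip the markup first so tags don’t inflate the number.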

While you’re experimenting with template designs, you can also try different images.

Try one large background image with text written over it.

Another option is to include a picture within the content.

Your A/B template test can help determine which method is more effective.

Swapping out one image for another is something else you can test.

For example, if you’re using a picture of a person, test the difference between a man and a woman.

Conclusion

A/B testing works.

If you used these tests to successfully optimize conversions on your website, the same concept could be applied to your email marketing strategy.

Before you get started, come up with a valid hypothesis.

Don’t start changing things without a plan.

Test only one variable at a time.

After you’ve come up with conclusive results for your first test, you can move on to something else.

Try testing your:

- Subject line

- Call-to-action wording

- First few sentences of the message

- Day and time of sending the email

- CTA button placement

- Templates

- Images

If your email platform doesn’t have a built-in A/B test feature, it’s no problem.

You can manually run an A/B test by creating two separate groups and two different campaigns.

This is still an effective method.

A/B tests will help increase opens, clicks, and conversions.

Ultimately, this can generate more revenue for your business.

How will you modify the call to action in the first variation of your A/B test?

https://www.quicksprout.com/2017/10/27/how-to-start-ab-testing-your-email-marketing-a-beginners-guide/
